Videomenthe & ProConsultant Informatique become Bminty

Generative AI: OpenAI's Whisper for transcription

10 May 2024

There's a lot of talk about generative AI in the world of professional video, particularly on the creative side: image production, advanced video retouching, adding effects, and so on.

But the emergence of generative artificial intelligence is also opening up new perspectives in the world of transcription, translation and subtitling! At the heart of this revolution is Whisper, OpenAI's speech recognition model, which pushes the boundaries of speech-to-text. Find out more in this article.

Whisper, the new ChatGPT for speech-to-text?


Whisper is a family of generative models trained on a very large volume of data (680,000 hours of weakly supervised, multilingual and multitask data collected from the web) to produce accurate, natural transcripts and subtitles that come close to what a human could achieve.

What sets Whisper apart is its ability to understand the overall context of the audio and generate text that captures not only the words spoken, but also their meaning and intent. That is where Whisper's real power lies!

For example, we put it to the test by feeding it an audio file with a sentence cut off in the middle. Well, Whisper finished the sentence for us (and it made sense!), whereas other transcription tools "just" transcribed the sentence word by word, and therefore stopped in the middle.

This approach enables Whisper to produce high-quality subtitles even in difficult audio conditions, such as the presence of various accents, background noise or technical or complex language.
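Subtitles ultimately have to be delivered in a timed file format. As a minimal sketch, assuming timed segments shaped like the `segments` list returned by the open-source openai-whisper package (dicts with `start` and `end` in seconds, plus `text`), the hypothetical helpers below write them out as SubRip (.srt), one of the most common subtitle formats. Professional subtitling also applies line-length and reading-speed rules that this sketch ignores.

```python
def format_srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: list[dict]) -> str:
    """Turn timed segments into the text of an .srt subtitle file.

    Assumption: each segment is a dict with "start", "end" (seconds)
    and "text", as in openai-whisper's transcribe() result.
    """
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_srt_timestamp(seg["start"])
        end = format_srt_timestamp(seg["end"])
        # One SRT cue: counter, time range, then the subtitle text.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # A blank line separates consecutive cues.
    return "\n".join(blocks)
```

For example, a segment ending at 61.25 s is written as `00:01:01,250` in the file.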

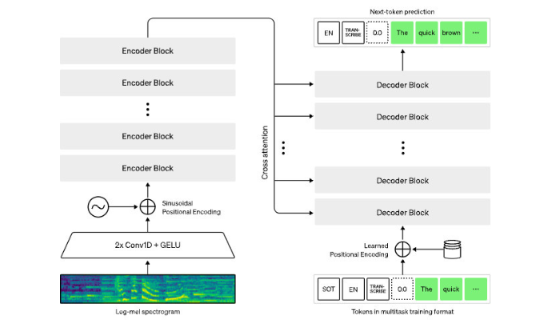

Whisper architecture

Whisper uses a simple end-to-end architecture: an encoder-decoder Transformer.

The workflow is as follows:

- Input audio is divided into 30-second segments.

- These segments are converted into log-Mel spectrograms, which, simply put, mimic the way the human ear responds to different frequencies.

- They are then sent to an encoder.

- A decoder is trained to predict the corresponding text, using special tokens to perform tasks such as language identification, phrase-level timestamps, multilingual transcription and speech translation into English.
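The first two steps can be sketched in plain Python. This is a simplified illustration, not Whisper's own code: the constants (16 kHz input, a 160-sample hop giving 3,000 spectrogram frames per 30-second window) match those used by the open-source openai-whisper package, but the real pipeline computes the spectrogram with an FFT and a precomputed mel filter bank, and during long transcriptions it advances its 30-second window based on predicted timestamps rather than cutting at fixed boundaries. The `hz_to_mel` formula is the common HTK-style mel scale, shown only to illustrate why the scale resembles human hearing; Whisper's filter bank may rely on a slightly different mel variant. Function names are hypothetical.

```python
import math

SAMPLE_RATE = 16_000    # Whisper works on 16 kHz mono audio
CHUNK_SECONDS = 30      # length of each window fed to the encoder
HOP_LENGTH = 160        # samples between spectrogram frames (10 ms at 16 kHz)
CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS  # 480,000 samples per window


def split_into_chunks(samples: list[float]) -> list[list[float]]:
    """Cut a 16 kHz signal into 30-second windows, zero-padding the last one."""
    chunks = []
    for start in range(0, len(samples), CHUNK_SAMPLES):
        chunk = samples[start:start + CHUNK_SAMPLES]
        # Pad the final, shorter window with silence up to exactly 30 s.
        chunk = chunk + [0.0] * (CHUNK_SAMPLES - len(chunk))
        chunks.append(chunk)
    return chunks


def hz_to_mel(freq_hz: float) -> float:
    """HTK-style mel scale: close to linear below ~1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


# Number of spectrogram frames per 30-second window.
frames_per_chunk = CHUNK_SAMPLES // HOP_LENGTH  # 3,000
```

With this formula, 1,000 Hz maps to roughly 1,000 mel, and a 100 Hz step near 100 Hz spans far more mels than the same step near 7,000 Hz, mirroring the ear's finer resolution at low frequencies.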

Whisper's current limitations

Whisper is a relatively new system and, for the time being, has a few limitations.

1. Performance on LibriSpeech

LibriSpeech is a corpus of approximately 1,000 hours of read English speech sampled at 16 kHz. It is widely used to train and evaluate automatic speech recognition (ASR) models.

Whisper was not specifically trained to outperform models specialized on LibriSpeech, which perform very well in that narrow setting.

However, when Whisper's zero-shot performance is measured across a wide variety of datasets, it proves much more robust and makes 50% fewer errors than these models.
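The article does not name the metric behind "50% fewer errors"; speech recognition accuracy is conventionally measured as word error rate (WER), which is what the Whisper paper reports. Below is a minimal sketch of WER as a word-level edit distance, plus the relative-reduction arithmetic behind a phrase like "50% fewer errors". The function names are hypothetical, and published Whisper results apply a more thorough text normalizer before scoring, whereas this sketch only lowercases and splits on whitespace.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # dist[i][j] = minimum edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)


def relative_error_reduction(baseline_wer: float, new_wer: float) -> float:
    """Share of the baseline's errors removed; 0.5 means "50% fewer errors"."""
    return (baseline_wer - new_wer) / baseline_wer
```

For instance, with illustrative (not measured) figures of 20% WER for a specialized model and 10% for Whisper on some dataset, `relative_error_reduction(0.20, 0.10)` gives 0.5, i.e. 50% fewer errors.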

2. Audio file size

Through OpenAI's API, Whisper accepts audio of any duration, provided the uploaded file is smaller than 25 MB. For larger files, follow OpenAI's guidelines for long inputs, such as splitting the audio into smaller chunks or using a compressed audio format.
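One way to split uncompressed audio under the size limit is sketched below with Python's standard `wave` module. It assumes a PCM WAV file and reads "25 MB" as 25,000,000 bytes, the more conservative interpretation; the function name and the `_000.wav` naming scheme are hypothetical, not part of any OpenAI tool.

```python
import wave

# Assumption: "25 MB" read as 25,000,000 bytes, the more conservative reading.
API_LIMIT_BYTES = 25_000_000
# A plain PCM header written by Python's wave module is 44 bytes long.
WAV_HEADER_BYTES = 44


def split_wav_by_size(path: str, out_prefix: str,
                      max_bytes: int = API_LIMIT_BYTES) -> list[str]:
    """Split a PCM WAV file into numbered parts of at most max_bytes each."""
    with wave.open(path, "rb") as src:
        params = src.getparams()
        bytes_per_frame = params.nchannels * params.sampwidth
        frames_per_part = (max_bytes - WAV_HEADER_BYTES) // bytes_per_frame
        if frames_per_part < 1:
            raise ValueError("max_bytes is too small to hold any audio")

        part_paths = []
        while True:
            frames = src.readframes(frames_per_part)
            if not frames:  # reached the end of the source file
                break
            part_path = f"{out_prefix}_{len(part_paths):03d}.wav"
            with wave.open(part_path, "wb") as dst:
                dst.setparams(params)    # same rate, channels and sample width
                dst.writeframes(frames)  # header frame count is patched on write
            part_paths.append(part_path)
    return part_paths
```

Cutting at fixed byte offsets can split a word in two; in practice, cutting at pauses in speech gives cleaner transcripts around chunk boundaries.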

3. Languages

- Whisper's training data is mostly English (about two-thirds of it). For the time being, this may result in weaker support for some other languages.

- While Whisper can transcribe speech in a very large number of languages, translation is currently only available into English.
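Task and language selection happens through the decoder's special tokens mentioned in the architecture section. The sketch below assembles that prefix as strings to make the English-only translation rule concrete. The token spellings match those of the open-source openai-whisper tokenizer, but the real decoder works on integer token IDs, and the helper names here are hypothetical.

```python
def build_decoder_prompt(language: str, task: str = "transcribe",
                         timestamps: bool = True) -> list[str]:
    """Sketch of the special-token prefix that tells Whisper's decoder what to do."""
    if task not in ("transcribe", "translate"):
        raise ValueError("task must be 'transcribe' or 'translate'")
    tokens = [
        "<|startoftranscript|>",
        f"<|{language}|>",  # language spoken in the audio, e.g. "fr"
        f"<|{task}|>",      # transcribe: same language; translate: into English
    ]
    if not timestamps:
        tokens.append("<|notimestamps|>")  # text only, no timing tokens
    return tokens


def output_language(language: str, task: str) -> str:
    """Language of the text produced: translation always targets English."""
    return "en" if task == "translate" else language
```

So French audio with the `translate` task yields English text, while the `transcribe` task keeps it in French.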

How do I use Whisper?

For the time being, testing Whisper requires technical skills that we don't all have! Using it on your laptop requires you to install several tools (Python, pip, Chocolatey on Windows, FFmpeg and, of course, Whisper), then run commands to convert your audio into text.

There is, however, a relatively accessible solution for those without the requisite technical skills. Google Colab (short for Colaboratory), a hosted notebook service from Google, lets you run Python code directly in your browser, with nothing to install on your computer. If you are comfortable with English, simply follow the instructions on the platform to generate a text transcript of an audio file.
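For those who do want to try it locally, a minimal sketch using the open-source `openai-whisper` package (installed with `pip install -U openai-whisper`) looks like this. `whisper.load_model` and `model.transcribe` are the package's documented entry points; the helper names and the `"base"` model choice are assumptions for illustration. The prerequisite check covers only FFmpeg and the Whisper package, since Python and pip are already in place if the script runs.

```python
import importlib.util
import shutil


def check_prerequisites() -> dict[str, bool]:
    """Report whether the tools needed to run Whisper locally are available."""
    return {
        # FFmpeg decodes audio files (on Windows it can be installed via Chocolatey).
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # The openai-whisper package is imported under the name "whisper".
        "whisper": importlib.util.find_spec("whisper") is not None,
    }


def transcribe(path: str, model_name: str = "base") -> str:
    """Transcribe an audio file with the open-source openai-whisper package."""
    import whisper  # imported lazily so check_prerequisites() works without it

    model = whisper.load_model(model_name)  # downloads the model on first use
    result = model.transcribe(path)
    return result["text"]
```

Once `check_prerequisites()` reports `True` for both entries, calling `transcribe("interview.mp3")` on a (hypothetical) file returns the transcript as a single string.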

Videomenthe, your partner for perfect subtitles

Subtitling requires linguistic and technical skills, as well as a good knowledge of the standards in force in each country and for each type of subtitling (e.g. SDH, subtitles for the deaf and hard of hearing).

We offer subtitling solutions for the audiovisual industry and businesses:

- EoleCC, a collaborative platform for multilingual subtitling and SDH,

- a lab service, with subtitles reviewed by professional subtitlers and translators specializing in SDH.